SHOW NOTES

Podcast Recorded on January 12th, 2024 (Weather-dependent)

HOSTS

AB – Andrew Ballard

Spatial AI Specialist at Leidos.

Robotics & AI defence research.

Creator of SPAITIAL.

Helena Merschdorf

Marketing/branding at Tales Consulting.

Undertaking her PhD in Geoinformatics & GIScience.

Mirek Burkon

CEO at Phantom Cybernetics.

Creator of Augmented Robotality AR-OS.

Violet Whitney

Adj. Prof. at U.Mich.

Spatial AI insights on Medium.

Co-founder of Spatial Pixel.

William Martin

Director of AI at Consensys.

Adj. Prof. at Columbia.

Co-founder of Spatial Pixel.

Introductions

We’re down by one William this week – we trust he is in bed, resting – and dreaming of electric sheep.

FAST FIVE – Spatial AI News of the Week

From Violet:

Ballie: the AI-powered robot from Samsung

Is it a projector on wheels? Is it a battery-powered tennis ball? What can it interact with – and can dogs see projected images, anyway? Only time will tell…

https://www.designboom.com/technology/samsung-ballie-ai-robot-ces-2024-01-09-2024/

From Helena:

Volkswagen + ChatGPT = Your own personalised voice assistant?

Direct from CES, the Consumer Electronics Show in Las Vegas, Volkswagen is announcing a new partnership with OpenAI to put ChatGPT into their new car interfaces – starting as soon as Q2 2024.

What are the potential use cases? Tour guides en route / at destination – or (yikes!) – replacing podcasts with location-specific historic stories along your way?

From Mirek:

Insight: Brian Schwab, Director of Interaction Design at the Lego Group – “XR is not what you think”

“The wondrous truth of wearable XR is that it’s NOT about visuals. In all my years of XR work, the best examples had only one thing in common: absolutely minimal pixels.

Its not about screens everywhere, that is what’s known as a transition marketing term used to get folks to see the immediate understandable use. Its low hanging fruit in the most meanings possible.

XR tech is actually about human native inputs. It’s being able to sample headpose and eye vectors and hands DIRECTLY, in a low latency manner while the user is in flow. Not through an abstract interaction or a drop down interface.

This means you can lower the amount of visual feedback necessary in the past because of the terrible mismatch to humanity.

There’s a reason Apple called their device Vision Pro and not Visuals Pro.

You will know folks have finally found their XR sea legs when you use an application and you realize it couldn’t possibly be done with a mouse and keyboard. Or a pile of shortcuts. Or a controller.

XR isn’t dead, it’s barely fetal. It’s worming its way into the brains of the creators out there. True change takes time, most especially in the actual experts that need a while to work their way around all the decades of experience that solidified the glory of screen optimized experiences.

Its coming. Open your head, eyes, and hands…and try to see new ways using old tools.”

https://www.linkedin.com/feed/update/urn:li:activity:7149741318913630209/

From AB:

Three new text-to-3D-model tools:

Luma’s Genie – for high-fidelity/high-polygon count 3D objects from your text input:

https://techcrunch.com/2024/01/09/luma-raises-43m-to-build-ai-that-crafts-3d-models/

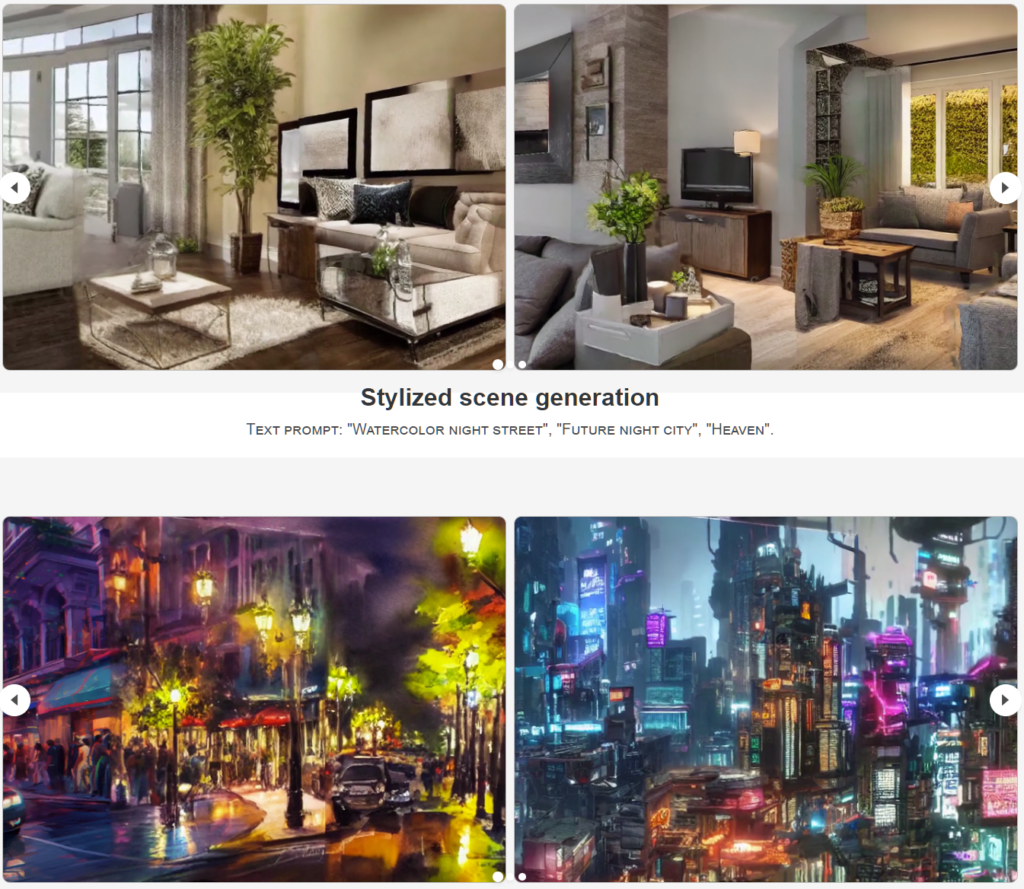

Text2immersion – using Gaussian Splats (GaSPs) to make a… 2.9D? not-quite-3D scene that the user’s camera sits firmly in the middle of. Movement is limited to the small shifts around a central point familiar from early NeRF/GaSP demos, so there’s no ‘exploration’ to speak of just yet. This would be brilliant for a new genre of text-based games, though: Go North. Open Chest. Use Axe. Etc.

https://ken-ouyang.github.io/text2immersion/

And finally, En3D: a tool for making rigged human 3D models for future metaverse avatars – either zero-shot from a single image of an existing person, or built up more slowly from text input.

Definitely missing out on a lot of depth detail in many places, more so when using the zero-shot mode – but a great leap towards simply describing what you want.

https://menyifang.github.io/projects/En3D/index.html

All three tools suffer from ‘not-quite-there-yet’ syndrome, but as with the new breed of LLM tools, their output should be treated as input to further steps of refinement. The Luma Genie tool has the most potential, given it can output fully enclosed and completely arbitrary meshes – and at the other end of the scale, Text2immersion’s Gaussian splatting output does not seem to have a pathway to that further refinement. Yet.

To absent friends.