Recorded on February 23rd, 2024

HOSTS

AB – Andrew Ballard

Spatial AI Specialist at Leidos.

Robotics & AI defence research.

Creator of SPAITIAL.

Helena Merschdorf

Marketing/branding at Tales Consulting.

Undertaking her PhD in Geoinformatics & GIScience.

Mirek Burkon

CEO at Phantom Cybernetics.

Creator of Augmented Robotality AR-OS.

Violet Whitney

Adj. Prof. at U.Mich.

Spatial AI insights on Medium.

Co-founder of Spatial Pixel.

William Martin

Director of AI at Consensys.

Adj. Prof. at Columbia.

Co-founder of Spatial Pixel.

FAST FIVE – Spatial AI News of the Week

From Violet:

SoundxVision’s “The Ring” as a minimalistic spatial input device

From this Finnish hardware/software design group comes a tantalising preview of “The Ring” – a prototype device with finger pressure sensing, so that the thumb-mounted controller “becomes a creative tool for the world canvas”.

From William:

Blog post/research video from Runway, introducing the concept of a spatially-aware ‘General World Model’

A prescient white paper/video coining the term ‘General World Model’ for a spatially-aware multi-modal large model… whew – quite the definition, but essentially what we at SPAITIAL commonly refer to as the next step in the progression text –> images –> video –> spatially-aware large models.

Will the term and acronym (‘GWMs’) catch on? Time will tell. The concept is rock-solid; the marketing… still needs some thought.

Do watch along, and stay tuned as we review Runway’s efforts in future episodes in light of their new catchphrase.

https://research.runwayml.com/introducing-general-world-models

From AB:

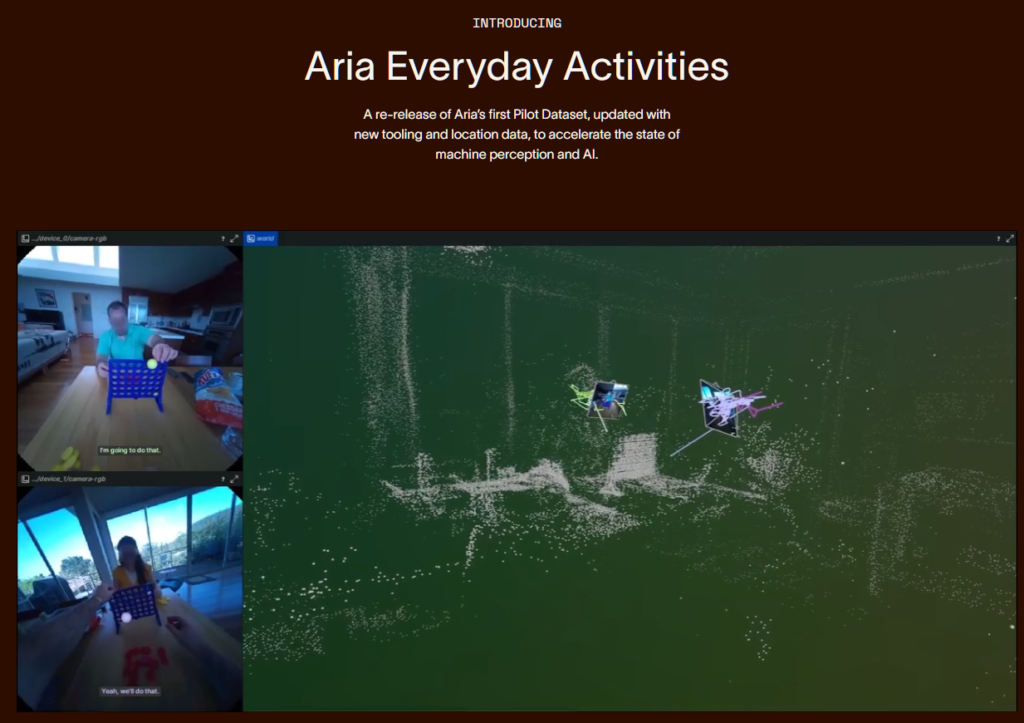

Meta’s ‘Aria’ – an egocentric and spatially-rich dataset – gets a huge update

Aria is a dataset for researchers and spatial hackers alike, and it has just had a major uplift in both the volume of data captured and, more importantly, the richness of the dataset, including new data types and annotations.

Starting from the point of view of ‘me’, this dataset follows one or two people through various everyday activities while continuously capturing images, video, sound, positioning, gaze tracking and object detection, as well as point clouds of the surrounding space and the wearer’s movements within it.

Why all the fuss? Some commentators suggest that this kind of dataset is perfect for training your very own ‘Jarvis’, an AI that knows *you*. More neutrally, though, this dataset should foster new understanding and embeddings around how humans navigate, where they look, how they validate their actions, and how their intent differs from their actual movements.

https://www.projectaria.com/datasets/aea/

To absent friends.