From Mirek:

It’s uncanny – and yet I can’t look away.

From Figure, in collaboration with OpenAI, comes a vision of the future called Figure 01… if that future includes both some minor pauses due to latency AND an overly human set of filler ‘ums’ and ‘well…’s.

All minor mocking aside, this is a radical fusion of sensors and capabilities, mixed in with natural speech inputs and outputs – and with a side-order of explainability: the robot can explain what it’s doing while (or before!) it performs those actions.

Sign me up.

https://www.youtube.com/watch?v=Sq1QZB5baNw

From William:

Google Earth Studio

Devin’s Capabilities: With our advances in long-term reasoning and planning, Devin can plan and execute complex engineering tasks requiring thousands of decisions. Devin can recall relevant context at every step, learn over time, and fix mistakes.

We’ve also equipped Devin with common developer tools including the shell, code editor, and browser within a sandboxed compute environment—everything a human would need to do their work.

Finally, we’ve given Devin the ability to actively collaborate with the user. Devin reports on its progress in real time, accepts feedback, and works together with you through design choices as needed.

https://www.cognition-labs.com/

YouTube Announcement

From Violet:

Shaper Origin: the router you didn’t know you needed

Bringing back the feel of a hand tool with the precision and repeatability of a CAD/CAM machine or 3D printer – Shaper’s Origin router has it all. Watch the video to see how it cuts predetermined shapes and patterns on objects of any size. We can’t wait to a) get our hands on one, and b) see what other hand tools can turn into smart assistants!

https://www.shapertools.com/en-us/origin

From AB:

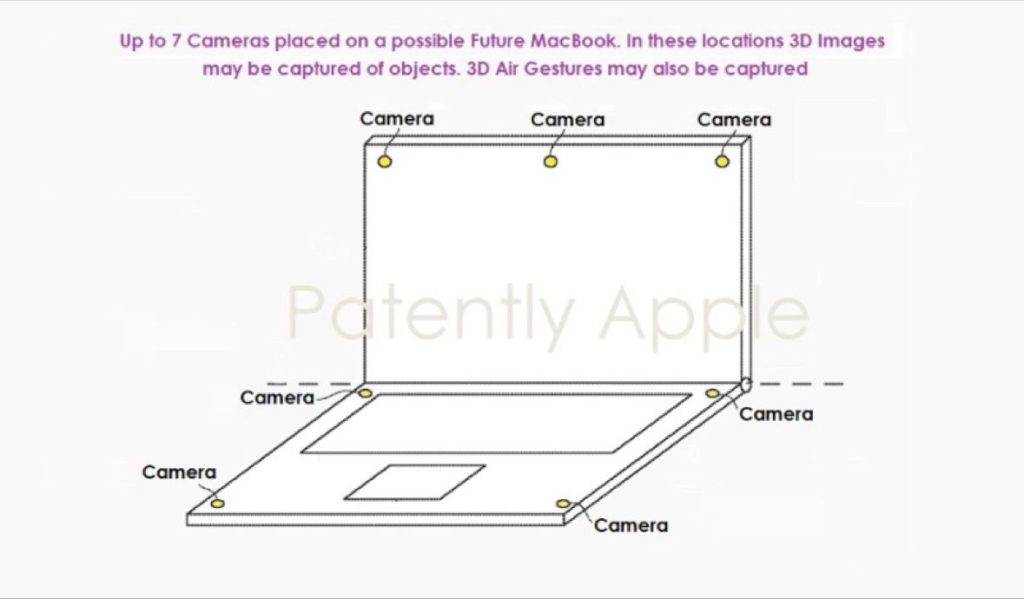

Apple’s new Patent: a 7-camera spatially-aware laptop!

While patents aren’t worth the paper they’re not written on anymore, they do give a glimpse into what a company is thinking about. In this case the company is Apple, and the question is: if your new MacBook Pro had up to 7 cameras arranged around the corners, what could you do with that setup?

Jeremy Dalton’s post on LinkedIn started a great discussion about the potential implications and future uses. Simple ones like using the cameras to capture small objects via photogrammetry, sure – but how about tracking eye movements and hand/finger movements, to bring the visionOS gesture commands to a ‘regular’ laptop?

Other commenters have also suggested that it may bring a pseudo-stereoscopic 3D avatar into FaceTime calls, for crossover potential with Vision Pro users, and invite more people to collaborate in the spatial world, albeit non-immersively.

Jeremy Dalton’s Post on LinkedIn

HOSTS

AB – Andrew Ballard

Spatial AI Specialist at Leidos.

Robotics & AI defence research.

Creator of SPAITIAL

Helena Merschdorf

Geospatial Marketing/Branding at Tales Consulting.

Undertaking her PhD in Geoinformatics & GIScience.

Mirek Burkon

CEO at Phantom Cybernetics.

Creator of Augmented Robotality AR-OS.

Violet Whitney

Adj. Prof. at U.Mich.

Spatial AI insights on Medium.

Co-founder of Spatial Pixel.

William Martin

Director of AI at Consensys

Adj. Prof. at Columbia.

Co-founder of Spatial Pixel.

To absent friends.