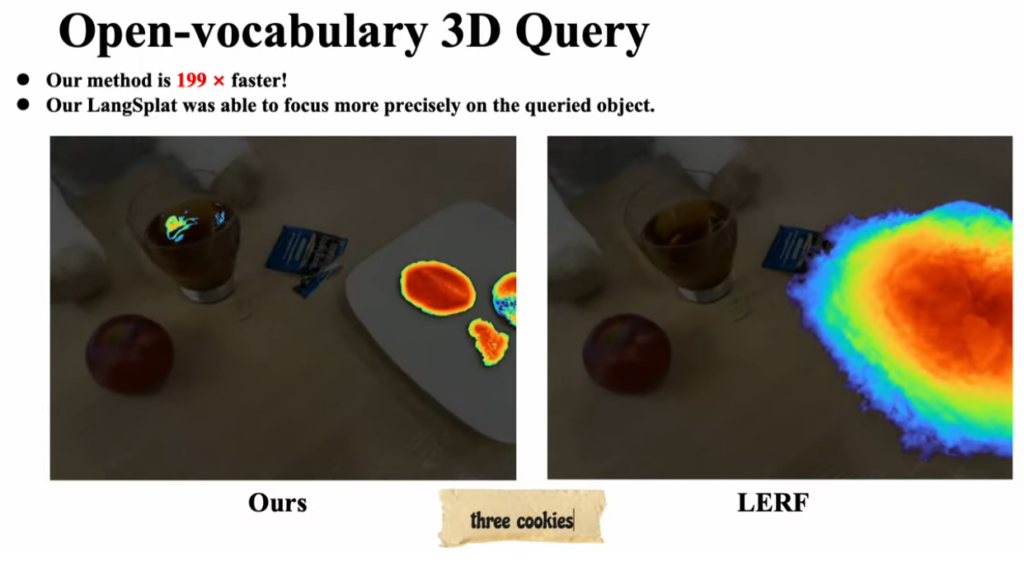

New from researchers at Tsinghua University and Harvard University comes LangSplat – a method of integrating a natural language search capability over a segmented NeRF – reported to be some 200 times faster and considerably more accurate than its predecessor, LERF.

That’s a seriously deep sentence. Let’s break it down from back to front:

- NeRFs are Neural Radiance Fields: AI-powered ‘structure from motion’, able to recreate 3D scenes from 2D camera inputs, on the fly. Relatively new: first published in 2020, they rose to prominence in 2023.

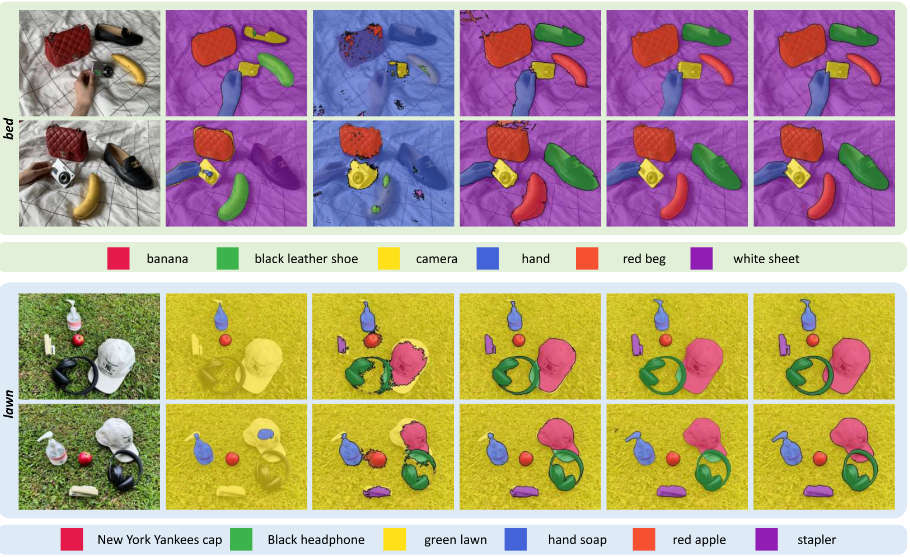

- Segmented NeRFs are a software layer on top of a regular NeRF scene, whereby the edges and volumes of discrete objects can be separated into distinct segments – which hold firm even when the virtual camera is looking from previously uncaptured viewpoints.

- NLP is natural language processing: asking questions in ways similar to the spoken word. In this instance, LangSplat can not only query the segmented portions of the NeRF, but can also identify a wide vocabulary of objects – more than the standard table/chair/person level of detail.
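To make the idea of querying segments by text a little more concrete, here is a minimal sketch of one common way such a lookup can work: each segment carries an embedding vector, the text query is embedded into the same space, and the closest match wins. This is an illustration under stated assumptions, not LangSplat's actual code. The function names, the three-dimensional vectors and the segment labels are all invented for the example; a real system would use high-dimensional embeddings from a vision-language model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_segment(query_embedding, segment_embeddings):
    """Return (name, score) for the segment closest to the query."""
    scores = {
        name: cosine_similarity(query_embedding, emb)
        for name, emb in segment_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]

# Hypothetical toy embeddings, invented for illustration only.
segments = {
    "mug": [0.9, 0.1, 0.0],
    "spoon": [0.1, 0.9, 0.1],
    "table": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of a text query such as "coffee cup".
query = [0.8, 0.2, 0.1]

name, score = best_segment(query, segments)
print(name, round(score, 3))
```

With these toy numbers the query lands on the "mug" segment. The point is only that an open vocabulary falls out of comparing embeddings, rather than choosing from a fixed list of class labels.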

The project GitHub page has links to a series of YouTube videos, showing in detail how specific queries can pick out rather obscure objects within a scene, with their edges clearly defined.

If 2023 was the year of BOTH Large Language Models AND the rise of NeRFs and GaSPs to overtake traditional photogrammetry, then 2024 looks to be the year of LLMs AND fully interpretable NeRFs, as a new mode of exploring spatial data.